Scheduled Mutations with Kyverno

Resource mutation is a valuable ability that can solve many different use cases, some of which I covered in the past here and here. What most mutations have in common, however, is that some event must occur to trigger them. This event is most commonly an AdmissionReview request resulting from an operation performed on a resource, such as a creation, update, or deletion. But there are also cases where you want to perform a mutation on a schedule, for example to scale some Kubernetes Deployments to zero on a nightly basis or to annotate Namespaces. In this short-ish post, I'll show one neat way I came up with to use Kyverno to perform mutations on a scheduled basis.

Kyverno does not have a native ability to perform a mutation on a scheduled basis. Fortunately, there is another mechanism within Kyverno which does run on a schedule: cleanup policies. A cleanup policy is a Kyverno policy type which allows the user to request that Kyverno perform a cleanup (i.e., deletion) of resources based upon a schedule. The schedule is given in cron format (because cleanup policies are actually powered by CronJobs), so when the scheduled time occurs, Kyverno selects the resources defined in the policy and removes them.

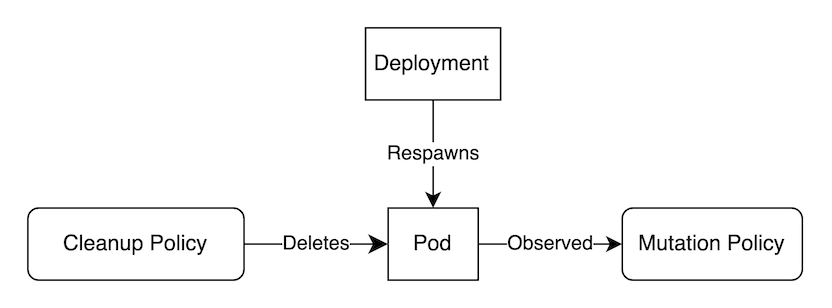

Cleanup policies result in deletion events. And now that we have a scheduled deletion, we can harness this deletion as the input for a separate mutation rule, thereby effectively mutating on a scheduled basis. The catch is that the resource we're deleting needs to become available again or else the cleanup policy won't have anything to clean up. With nothing to clean up, there's no input trigger. What could we use? Well, how about a Pod spawned from a Deployment? When we delete a Pod owned by a controller such as a Deployment (actually, the ReplicaSet, the intermediary controller, is really the one responsible here), the desired state no longer matches the actual state and so another Pod will get brought online. That seems perfect! In total, the whole flow looks something like the following: the cleanup policy deletes the Pod on schedule, that deletion triggers the mutation rule, and the ReplicaSet brings up a replacement Pod ready for the next run.

Now, let's put this in context with a real-world use case. We have some Deployments in a Namespace called saturn which come in a couple of varieties. There are "experimental" or ephemeral Deployments alongside some applications which need to be perpetually available, including both infrastructure-level tools and customer-facing apps. We want to scale the experimental Deployments, indicated by the label env=dev, to zero every day at midnight but not touch any others. This may be a tiny bit contrived but it suffices to illustrate the concept.

First, we need to put some permissions in place. The manifest below includes two such permissions. The first is to allow Kyverno to delete Pods while the second allows it to mutate (i.e., update in this case) existing Deployments. Both of these are permissions Kyverno does not provide out-of-the-box.

```yaml
# Allows the Kyverno cleanup controller to delete Pods.
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    app.kubernetes.io/component: cleanup-controller
    app.kubernetes.io/instance: kyverno
    app.kubernetes.io/part-of: kyverno
  name: kyverno:cleanup-pods
rules:
- apiGroups:
  - ""
  resources:
  - pods
  verbs:
  - get
  - list
  - delete
---
# Allows the Kyverno background controller to update existing Deployments.
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    app.kubernetes.io/component: background-controller
    app.kubernetes.io/instance: kyverno
    app.kubernetes.io/part-of: kyverno
  name: kyverno:update-deployments
rules:
- apiGroups:
  - apps
  resources:
  - deployments
  verbs:
  - update
```

Next, let's simulate one of those experimental Deployments. I'll just use a simple busybox app here so you get the picture. Notice how this has the label env=dev.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: busybox
  namespace: saturn
  labels:
    app: busybox
    env: dev  # marks this Deployment as experimental
spec:
  replicas: 2
  selector:
    matchLabels:
      app: busybox
  template:
    metadata:
      labels:
        app: busybox
    spec:
      containers:
      - image: busybox:1.35
        name: busybox
        command:
        - sleep
        - 1d
```

Now we get to the cleanup policy. This is a Namespaced CleanupPolicy which lives in the saturn Namespace and is configured to remove Pods whose names begin with cleanmeup and which also have the label purpose=deleteme. As you can see, this is highly specific since we want to be very tactical with the Pods we clean up. I chose a Namespaced CleanupPolicy here, but you could absolutely use a cluster-scoped ClusterCleanupPolicy instead, with an added clause which selects a specific Namespace.

```yaml
apiVersion: kyverno.io/v2alpha1
kind: CleanupPolicy
metadata:
  name: clean
  namespace: saturn
spec:
  match:
    any:
    - resources:
        kinds:
        - Pod
        names:
        - cleanmeup*
        selector:
          matchLabels:
            purpose: deleteme
  schedule: "0 0 * * *"  # every day at midnight
```
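
For comparison, here is a rough, untested sketch of what that cluster-scoped alternative might look like. It assumes the same kyverno.io/v2alpha1 API version as the Namespaced policy; the only differences are the kind, the absence of metadata.namespace, and the added namespaces clause which restricts the match to saturn.

```yaml
# Sketch only: assumes ClusterCleanupPolicy is served at the same
# API version as the Namespaced CleanupPolicy used in this post.
apiVersion: kyverno.io/v2alpha1
kind: ClusterCleanupPolicy
metadata:
  name: clean
spec:
  match:
    any:
    - resources:
        kinds:
        - Pod
        names:
        - cleanmeup*
        namespaces:
        - saturn  # the added clause selecting a specific Namespace
        selector:
          matchLabels:
            purpose: deleteme
  schedule: "0 0 * * *"
```

Either form should select the same Pods; the Namespaced one just keeps the policy living alongside the resources it affects.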

And now comes the Deployment supplying the Pod to be cleaned up. This cleanmeup Deployment exists solely so Kyverno has something to delete, which makes it the scheduled input trigger. Once its one and only replica is removed, it'll immediately spawn another, ensuring that subsequent runs of the cleanup policy have work to do.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: cleanmeup
  namespace: saturn
  labels:
    purpose: deleteme
spec:
  replicas: 1
  selector:
    matchLabels:
      purpose: deleteme
  template:
    metadata:
      labels:
        purpose: deleteme  # matched by the cleanup policy's selector
    spec:
      containers:
      - image: busybox:1.35
        name: busybox
        command:
        - sleep
        - 1d
```

And, finally, the mutation policy for existing resources is below. As you can see from the match block, we're very specifically matching only deletions of Pods whose names begin with cleanmeup in the saturn Namespace. When such a deletion is observed, we want Kyverno to scale to zero any Deployments which are also in the saturn Namespace and have the label env=dev. (The targets could be anywhere in the cluster if we omitted the mutate.targets[].namespace field.)

```yaml
apiVersion: kyverno.io/v2beta1
kind: ClusterPolicy
metadata:
  name: mutate-existing
spec:
  rules:
  - name: scale-dev-zero
    # Trigger: deletion of a cleanmeup Pod in saturn.
    match:
      any:
      - resources:
          kinds:
          - Pod
          names:
          - cleanmeup*
          namespaces:
          - saturn
          operations:
          - DELETE
    mutate:
      # Targets: Deployments in saturn.
      targets:
      - apiVersion: apps/v1
        kind: Deployment
        namespace: saturn
      patchStrategicMerge:
        metadata:
          labels:
            <(env): dev  # only patch Deployments labeled env=dev
        spec:
          replicas: 0
```

With all the pieces in place, it's a simple matter of time. When the schedule occurs, Kyverno will delete the cleanmeup Pod. That deletion triggers the mutation policy, which scales the matching Deployments to zero. This will run on a perpetual loop thanks to the cleanmeup Deployment respawning another Pod once the Kyverno cleanup controller deletes its managed Pod.
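
The same trigger can drive other kinds of patches, too. As a rough, untested sketch, here is how the rule might look if it annotated the env=dev Deployments rather than scaling them. The policy name, rule name, and the example.com/touched-by annotation key are all made up for illustration; everything else mirrors the policy above, so the update permission on Deployments granted earlier should still be enough.

```yaml
# Sketch only: policy name, rule name, and annotation key are hypothetical.
apiVersion: kyverno.io/v2beta1
kind: ClusterPolicy
metadata:
  name: annotate-existing
spec:
  rules:
  - name: annotate-dev
    # Same scheduled trigger: deletion of a cleanmeup Pod in saturn.
    match:
      any:
      - resources:
          kinds:
          - Pod
          names:
          - cleanmeup*
          namespaces:
          - saturn
          operations:
          - DELETE
    mutate:
      targets:
      - apiVersion: apps/v1
        kind: Deployment
        namespace: saturn
      patchStrategicMerge:
        metadata:
          labels:
            <(env): dev  # only patch Deployments labeled env=dev
          annotations:
            example.com/touched-by: scheduled-mutation
```

Only the patch body changes here; the cleanup policy and the cleanmeup Deployment stay exactly as they are.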

So, there you have it. Although it's a bit of a workaround, you can get scheduled mutations on existing resources with Kyverno. This could be useful in all sorts of ways, from scaling to annotating/labeling to you name it. In a future blog post, I'll show you a pretty cool use case for this ability which may really be of interest to platform teams. Until then.