Reloading Secrets and ConfigMaps with Kyverno

(This post first appeared on nirmata.com)

Policy is commonly thought of as being primarily (if not solely) useful in the area of security: blocking the "bad" while allowing the "good". This misconception is understandable, because many tools which implement "policy" are limited to exactly these tasks and offer nothing beyond them. In reality, policy can be far more versatile. It can be an extremely powerful tool for operations teams to automate common tasks declaratively, saving time and reducing risk.

If you've used Kubernetes for any length of time, chances are high you've consumed a ConfigMap or Secret in a Pod in some way. If not directly then certainly indirectly, possibly without your knowledge. They're both extremely common and useful sources of configuration data. Challenges arise, however, when you need to update that data while Pods consuming it are already running. While there are various small tools you can cobble together to handle this, in this article I'll share how you can do it more easily and more effectively, define it all as policy as code, and consolidate all those tools into one by using Kyverno.

ConfigMaps and Secrets can be consumed in a Pod in a number of different ways, including as environment variables, volumes, image pull secrets, and others. The only consumption method which affords any kind of automatic reloading is a volume mount, and only when that mount does not use subPath. For the other methods, such as environment variables, the only way to pick up updated contents is to recreate the Pod. Doing this manually might be fine for one-off cases, but it obviously doesn't scale. Although there are several small tools out there which can do this for you, the problem most (if not all) of them share is that they still require the ConfigMap or Secret in the Pod's own Namespace to be updated first.
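To make the distinction concrete, here is a minimal sketch of a Pod consuming blogsecret (the Secret used later in this article) both ways. The Pod name, image, key name message, and mount path are assumptions for illustration only.

```yaml
# Hypothetical Pod consuming the same Secret two ways, to contrast reload behavior.
apiVersion: v1
kind: Pod
metadata:
  name: secret-consumer-demo       # hypothetical name
spec:
  containers:
  - name: app
    image: busybox:1.36
    command: ["sh", "-c", "sleep 3600"]
    env:
    # Resolved when the container starts; an updated Secret is not
    # reflected here until the Pod is recreated.
    - name: BLOG_MESSAGE
      valueFrom:
        secretKeyRef:
          name: blogsecret
          key: message             # hypothetical key
    volumeMounts:
    # Whole-volume mount (no subPath): the kubelet eventually refreshes
    # the file /etc/blog/message after the Secret changes.
    - name: blog
      mountPath: /etc/blog
      readOnly: true
  volumes:
  - name: blog
    secret:
      secretName: blogsecret
```

Even with the volume, the app still has to re-read the file to notice the change, whereas the environment variable keeps its start-up value for the life of the Pod.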

Pods and their ilk, just like Secrets and ConfigMaps, are Namespaced objects. A Pod in one Namespace cannot reference or consume a Secret or ConfigMap residing in another Namespace. This is an issue unto itself because ConfigMaps and Secrets are very often the source of data which needs to be consumed cluster-wide; that is, the same ConfigMap may need to exist in many Namespaces with identical contents. And, if that wasn't enough, you need to be careful with these shared Secrets and ConfigMaps. Someone mistakenly removes or alters their contents and boom, instant outage (or, at least, an outage the next time the Pod gets restarted or recreated). So now you're up to three tools: one to reload them, one to sync them, and one to protect them. What if you could use one tool to do all of that? You can, and that tool is Kyverno.

Protecting Secrets and ConfigMaps

Secrets and ConfigMaps consumed by Pods have special status because those Pods depend on their existence. One level down, the app running within the container depends on the contents of that Secret or ConfigMap. Should the Secret or ConfigMap be deleted or altered, your app can malfunction or, worse, Pods can fail on start-up. For example, if a running Deployment consumes a ConfigMap key as an environment variable and that ConfigMap is later deleted, the next rollout of that Deployment produces Pods that cannot start because the kubelet cannot find the referenced ConfigMap, and so the rollout fails.

You now have to think about how to protect this dependency graph. Kubernetes RBAC only goes so far before it starts getting in the way: your cluster and Namespace operators need permissions to do their jobs, but people still make mistakes (even when driven by automation). And while there's a nifty immutable option for both ConfigMaps and Secrets, it has serious side effects. Aside from the obvious immutability preventing further updates, once that option is set it cannot be changed without recreating the resource. This is where Kyverno can step in and help. Kyverno can augment Kubernetes RBAC by limiting updates and deletes on a highly granular basis. For example, even if cluster operators have broad permissions to update or delete Secrets and ConfigMaps within the cluster, Kyverno can lock down specific resources based on name, label, user name, ClusterRole, and more.
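For reference, the immutable option is a single top-level field on the object. Here is a minimal sketch; the name frozen-config and its data are hypothetical (deliberately not blogsecret, since making that Secret immutable would break the synchronization described later).

```yaml
# Hypothetical Secret using the immutable field.
apiVersion: v1
kind: Secret
metadata:
  name: frozen-config              # hypothetical name
type: Opaque
immutable: true                    # once set, data cannot change and this cannot be set back to false
stringData:
  message: "hello"                 # hypothetical key and value
```

Once this is applied, the API server rejects changes to the data and to the immutable field itself, so the only way forward is to delete and recreate the Secret.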

Making Secrets and ConfigMaps Available

These API resources are Namespaced, which means they exist in one and only one Namespace. A Pod cannot cross-mount a Secret or ConfigMap from an adjacent Namespace. You need a solution to make these resources available to the Namespaces in which they're needed. Duplicating them, even with a GitOps controller, creates bloat and adds complexity. And what if you forget to make one of them available in a new Namespace? See the previous section on what happens. And what about getting them into existing Namespaces? Again, another point of complexity. Kyverno solves this too by using its unique generation capabilities. You can define and deploy a ConfigMap or Secret once in a Namespace and have Kyverno clone and synchronize it not only to new Namespaces but to existing ones as well. You can even permit your Namespace administrators to delete them, because Kyverno will restore the Secret or ConfigMap automatically by re-cloning it. All this is defined in a policy, dictated by you, and declared with neither knowledge nor use of a programming language.

Reloading Pods

Now you've got a handle on your Secrets and ConfigMaps, which is awesome. But your containers still don't know about the changes you've made to them. You now have a final concern: how to intelligently and automatically reload those Pods so they pick up the changes. A few tools out there can do this, but they're point solutions. Once again, Kyverno can come to the rescue, and declaratively. When changes are propagated to all those downstream ConfigMaps and Secrets, Kyverno can trigger a new rollout on the Pod controllers which consume them.

Putting it all together

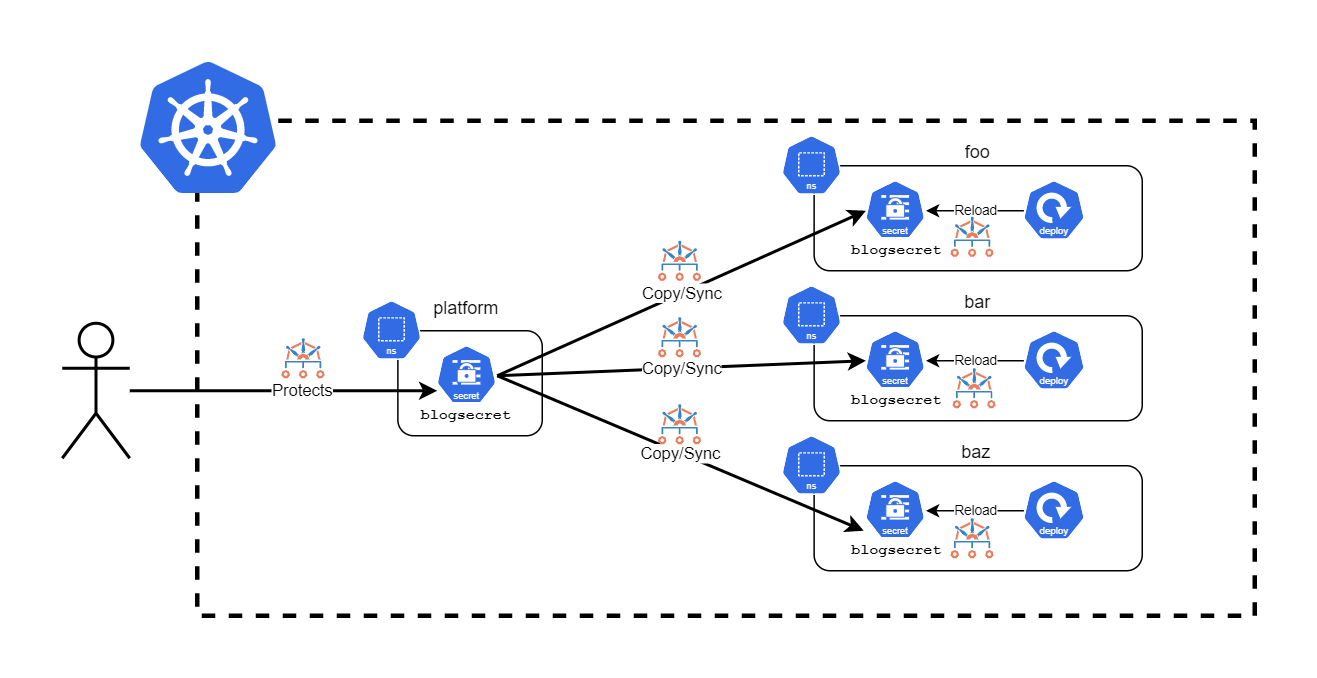

This is the architecture we'll put together illustrated below.

Let's walk through this before diving into the policies which enable the solution.

- A Namespace called platform contains the "base" Secret called blogsecret (this article is written with a Secret in mind, but it works equally well for a ConfigMap). This is a simple string value, but imagine it is, for example, a certificate or something else. We'll use a Kyverno validate rule here to ensure that this Secret is protected from accidental tampering or deletion by anyone who does not hold the cluster-admin role or is not the Kyverno ServiceAccount itself.
- This Secret will be cloned to all existing Namespaces (except system-level Namespaces like kube-* and Kyverno's own) and also to any new Namespaces created after the policy is created. Further updates to blogsecret will be propagated immediately. And deleting the synchronized Secret from any of those downstream Namespaces will cause Kyverno to replace it from the base.
- Once the changes land in the cloned Secrets, Kyverno will detect this and write or update an annotation in the Pod template of only those Deployments which consume this Secret, thereby triggering a new rollout of Pods.
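If you'd like to follow along, the base objects might look like the following minimal sketch. The article only describes blogsecret as a simple string value, so the key name message and its value are assumptions.

```yaml
# Minimal sketch of the base objects; the key and value are assumptions.
apiVersion: v1
kind: Namespace
metadata:
  name: platform
---
apiVersion: v1
kind: Secret
metadata:
  name: blogsecret
  namespace: platform
type: Opaque
stringData:
  message: "initial value"         # hypothetical key and value
```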

Now let's look at the Kyverno policies which bring it all together.

Validation

```yaml
apiVersion: kyverno.io/v1
kind: ClusterPolicy
metadata:
  name: validate-secrets
spec:
  validationFailureAction: enforce
  background: false
  rules:
  - name: protect-blogsecret
    match:
      any:
      - resources:
          kinds:
          - Secret
          names:
          - blogsecret
          namespaces:
          - platform
    exclude:
      any:
      - clusterRoles:
        - cluster-admin
      - subjects:
        - name: kyverno
          kind: ServiceAccount
          namespace: kyverno
    preconditions:
      all:
      - key: "{{ request.operation }}"
        operator: AnyIn
        value:
        - UPDATE
        - DELETE
    validate:
      message: "This Secret is protected and may not be altered or deleted."
      deny: {}
```

In this policy, we tell Kyverno exactly which Secret, and in which Namespace, we want to protect. The validationFailureAction is set to enforce, which blocks the request if anyone or anything other than a holder of the cluster-admin ClusterRole or the kyverno ServiceAccount attempts to update or delete the Secret. The precondition limits the rule to UPDATE and DELETE operations, and background is set to false because the exclude block relies on information about the requesting user which is only available at admission time. A disallowed attempt is rejected with the policy's message:

```
$ kubectl -n platform delete secret/blogsecret
Error from server: admission webhook "validate.kyverno.svc-fail" denied the request:

resource Secret/platform/blogsecret was blocked due to the following policies

validate-secrets:
  protect-blogsecret: This Secret is protected and may not be altered or deleted.
```
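The same approach extends to the other criteria mentioned earlier. As a sketch, the variant below protects any Secret carrying a label rather than one specific name; the policy name and the label ops.corp.com/protected are hypothetical. One caveat worth testing on your Kyverno version: an UPDATE which removes the label itself may not be caught, so verify that case before relying on it.

```yaml
apiVersion: kyverno.io/v1
kind: ClusterPolicy
metadata:
  name: validate-protected-secrets        # hypothetical name
spec:
  validationFailureAction: enforce
  background: false                       # required because exclude uses roles/subjects
  rules:
  - name: protect-labeled-secrets
    match:
      any:
      - resources:
          kinds:
          - Secret
          # Hypothetical label: any Secret carrying it, in any Namespace, is protected.
          selector:
            matchLabels:
              ops.corp.com/protected: "true"
    exclude:
      any:
      - clusterRoles:
        - cluster-admin
      - subjects:
        - name: kyverno
          kind: ServiceAccount
          namespace: kyverno
    preconditions:
      all:
      - key: "{{ request.operation }}"
        operator: AnyIn
        value:
        - UPDATE
        - DELETE
    validate:
      message: "This Secret is protected and may not be altered or deleted."
      deny: {}
```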

Generation

```yaml
apiVersion: kyverno.io/v1
kind: ClusterPolicy
metadata:
  name: generate-secret
spec:
  generateExistingOnPolicyUpdate: true
  rules:
  - name: generate-blogsecret
    match:
      any:
      - resources:
          kinds:
          - Namespace
    generate:
      kind: Secret
      apiVersion: v1
      name: blogsecret
      namespace: "{{request.object.metadata.name}}"
      synchronize: true
      clone:
        name: blogsecret
        namespace: platform
```

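In the above policy, Kyverno is told to clone blogsecret from the platform Namespace into existing and new Namespaces and keep the copies synchronized. Because the rule matches Namespaces, the variable {{request.object.metadata.name}} resolves to the name of whichever Namespace is being processed, so the clone lands in each Namespace without any one being named specifically. Setting generateExistingOnPolicyUpdate to true extends the rule to Namespaces which already exist when the policy is created, and synchronize: true keeps each clone in step with the source and restores it if it is deleted.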
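The policy above does not itself list the system Namespaces it should skip, so that behavior depends on how your Kyverno installation filters resources. If you prefer to make the exclusions explicit, a variant with an exclude block could look like this sketch; the exact list of Namespace names is an assumption to adapt to your cluster.

```yaml
apiVersion: kyverno.io/v1
kind: ClusterPolicy
metadata:
  name: generate-secret
spec:
  generateExistingOnPolicyUpdate: true
  rules:
  - name: generate-blogsecret
    match:
      any:
      - resources:
          kinds:
          - Namespace
    # Assumption: explicit exclusions instead of relying on Kyverno's
    # configured resource filters. Names support wildcards.
    exclude:
      any:
      - resources:
          kinds:
          - Namespace
          names:
          - kube-*
          - kyverno
          - platform        # the source Namespace already holds blogsecret
    generate:
      kind: Secret
      apiVersion: v1
      name: blogsecret
      namespace: "{{request.object.metadata.name}}"
      synchronize: true
      clone:
        name: blogsecret
        namespace: platform
```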

Mutation

```yaml
apiVersion: kyverno.io/v1
kind: ClusterPolicy
metadata:
  name: restart-deployment-on-secret-change
spec:
  mutateExistingOnPolicyUpdate: false
  rules:
  - name: reload-deployments
    match:
      any:
      - resources:
          kinds:
          - Secret
          names:
          - blogsecret
    preconditions:
      all:
      - key: "{{request.operation}}"
        operator: Equals
        value: UPDATE
    mutate:
      targets:
      - apiVersion: apps/v1
        kind: Deployment
        namespace: "{{request.namespace}}"
      patchStrategicMerge:
        spec:
          template:
            metadata:
              annotations:
                ops.corp.com/triggerrestart: "{{request.object.metadata.resourceVersion}}"
            (spec):
              (containers):
              - (env):
                - (valueFrom):
                    (secretKeyRef):
                      (name): blogsecret
```

Finally, in this mutation policy, Kyverno watches for updates to blogsecret in any Namespace and mutates every Deployment in that same Namespace which consumes blogsecret in an environment variable through a secretKeyRef reference. The conditional anchors (the keys wrapped in parentheses) are what restrict the patch to only those Deployments. For each matching Deployment, Kyverno adds an annotation called ops.corp.com/triggerrestart to the Pod template, set to the resourceVersion of the updated Secret. Because resourceVersion changes with every update, the annotation value is always new, which guarantees a real change to the Pod template and therefore a new rollout. The mutateExistingOnPolicyUpdate field is set to false, so Kyverno does not process existing Secrets when the policy itself is created or updated. And, by the way, adding an annotation is also what kubectl rollout restart does, except that it sets the key kubectl.kubernetes.io/restartedAt to the current time.
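To see what the anchors select, here is a sketch of a Deployment the rule would act on. The name, the Namespace demo, the image, and the key message are hypothetical; what matters is that it reads blogsecret through env[].valueFrom.secretKeyRef in a regular container.

```yaml
# Hypothetical Deployment selected by the reload-deployments rule.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: blog-consumer              # hypothetical name
  namespace: demo                  # hypothetical Namespace holding a clone of blogsecret
spec:
  replicas: 1
  selector:
    matchLabels:
      app: blog-consumer
  template:
    metadata:
      labels:
        app: blog-consumer
      # Kyverno adds ops.corp.com/triggerrestart here after blogsecret in
      # this Namespace is updated; you do not set it yourself.
    spec:
      containers:
      - name: app
        image: busybox:1.36
        command: ["sh", "-c", "echo $BLOG_MESSAGE; sleep 3600"]
        env:
        - name: BLOG_MESSAGE
          valueFrom:
            secretKeyRef:
              name: blogsecret     # satisfies the (name): blogsecret anchor
              key: message         # hypothetical key
```

As written, the anchors only cover this pattern under containers. A Deployment which consumes blogsecret through envFrom, through a volume, or only in an init container would not be selected by the rule.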

Demo

Rather than show a ton of step-by-step terminal output, I figured it'd be nicer to record this one. This is a real-time demo just to show I'm not trying to hide anything.

So that's it. Hopefully this idea produced a "that's cool!" response from you, as this combination of abilities can solve all sorts of use cases beyond what most admission controllers can provide. It also highlights one real-world (and common) scenario in which policy is not just a security feature but a powerful ally for operations teams building fairly complex automation. Kyverno enables all of this, and more, with simple policies which anyone can understand and write in a matter of minutes, with neither knowledge nor use of a programming language.

Thanks for reading. Feedback and input are always welcome. Feel free to hit me up on Twitter or LinkedIn, or come by the very active #kyverno channel on Kubernetes Slack.