Temporary Policy Exceptions with Kyverno

(This post first appeared on nirmata.com)

One of the great new features in the recently released Kyverno 1.9 is something we introduced called Policy Exceptions, which decouples a policy from the exclusions to its scope. But what if you only want a policy exception to be in effect for a brief period of time? Here, I will show how you can combine policy exceptions with other Kyverno features, such as the new cleanup policies and the time-oriented JMESPath filters, to build a customized expiration date system for your exceptions.

Policy Exceptions provide even more control over which resources are excluded from the scope of a policy but, most importantly, they decouple the policy from those exclusions. With Policy Exceptions, policy authors can be a separate team from those who might request an exception. An exception is granted through a standalone resource and doesn't require modifying the policy itself. This allows very powerful and flexible scoping, and it helps ops teams and other Kubernetes users as well, since they can create an exception (subject to your controls) and have it apply as a totally separate process. They don't even need permission to view the policies for which they're writing an exception.

Having this ability is a boon for multiple teams. But if you do want to allow "regular" Kubernetes users to create Policy Exceptions for one-off or special circumstances (think break-glass situations like outages), those exceptions should be short-lived. For example, let's say you have a policy which prevents Pods from using host namespaces. That policy is important to the overall security of the cluster. There might be cases where this restriction needs to be lifted temporarily, but only for a very specific resource, such as a troubleshooting Pod which needs access to the underlying host. This could be a legitimate reason to let that Pod past the policy. But once the problem has been resolved, the exception is no longer needed and should be removed. This is where another major feature of Kyverno 1.9 comes into play: cleanup policies.

Cleanup policies allow Kyverno to delete resources, selected at a fine-grained level, on a recurring schedule. Because cleanup policies are just another custom resource, a Kyverno generate rule can create them like any other Kubernetes resource. I'll cover cleanup policies in more depth in a future post.
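To make that concrete, here is a minimal sketch of a standalone ClusterCleanupPolicy using the same kyverno.io/v2alpha1 fields (match and schedule) that appear in the generated policy later in this post. The policy name, the falcon-dev Namespace filter, and the example.com/temporary label are hypothetical and only illustrate the kind of selection a cleanup policy can express. The cleanup controller also needs RBAC permission to delete PolicyExceptions, which is granted further on.

```yaml
# Hypothetical example: once an hour, delete every PolicyException in
# falcon-dev that carries the made-up label example.com/temporary=true.
apiVersion: kyverno.io/v2alpha1
kind: ClusterCleanupPolicy
metadata:
  name: hourly-polex-sweep            # hypothetical name
spec:
  match:
    any:
    - resources:
        kinds:
        - PolicyException
        namespaces:
        - falcon-dev
        selector:
          matchLabels:
            example.com/temporary: "true"   # hypothetical label
  schedule: "0 * * * *"               # cron: minute 0 of every hour
```

A recurring sweep like this removes anything matching on each run; the approach in this post instead generates one cleanup policy per exception with a one-time, computed schedule.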

Policy Exceptions are a new alpha-level feature and must be explicitly enabled once you have Kyverno 1.9 up and running; see the documentation for the flag to pass. Once enabled, and unless you've also used the --exceptionNamespace flag to restrict where Policy Exceptions can be created, they are available cluster-wide. One of the first things you must decide in this situation is how you will gate the process of allowing Policy Exceptions into the cluster, and the documentation outlines several possibilities. At a minimum, creation should be controlled with Kubernetes RBAC. You can also use your GitOps systems and processes as a form of approval workflow.

You may also want to put limits on what a Policy Exception can look like. Since a Policy Exception can apply to multiple policies and multiple rules, and has access to the full power of the match/exclude block, you should decide how specific a Policy Exception must be in your environment. For example, it probably wouldn't make sense to allow a developer to create a Policy Exception which exempts all Pods from a ClusterPolicy that also matches all Pods; such an exception would effectively negate that policy. Since a Policy Exception is just another Kubernetes custom resource, you can apply Kyverno validate rules to it. Here's an example containing several individual rules from which you can choose the ones that make sense for you.

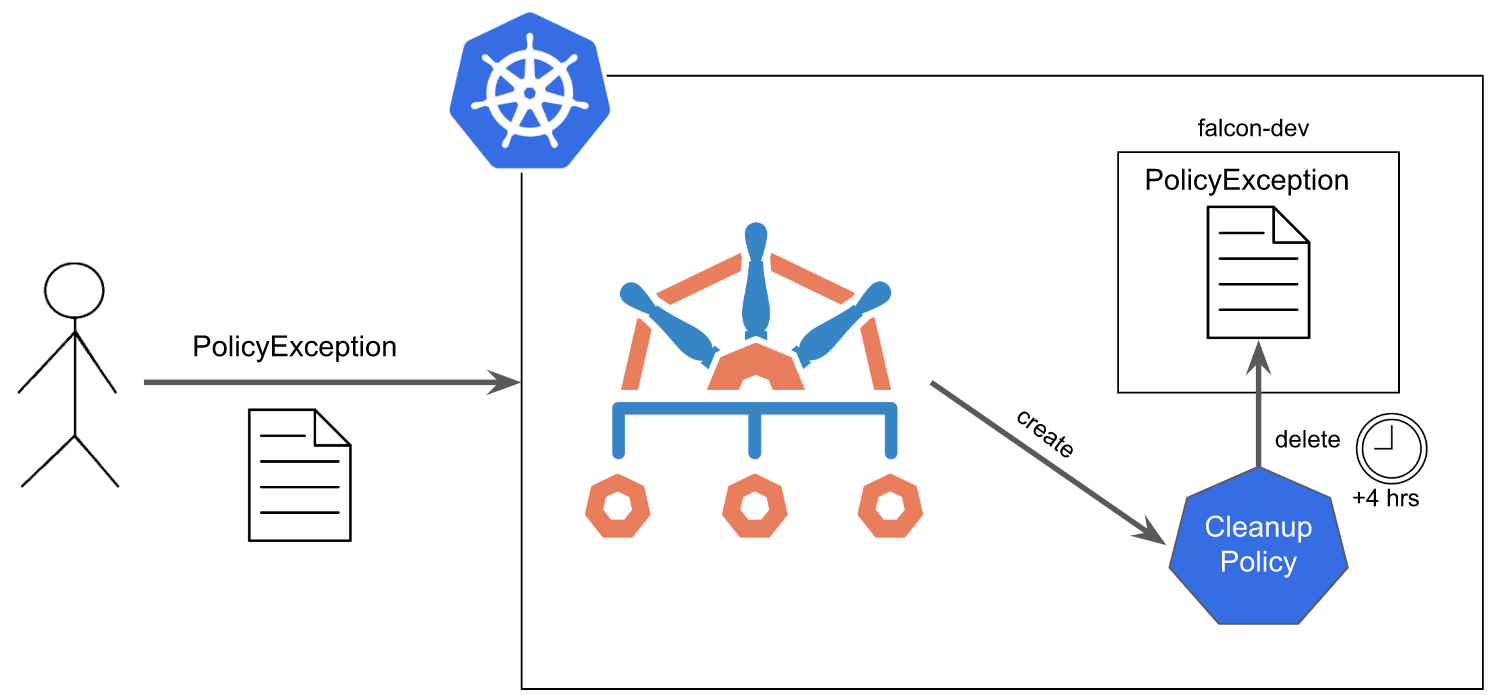
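As a rough sketch of both gating controls described above, assuming a user named chip who should only be able to create exceptions in the falcon-dev Namespace, the manifests below pair a namespaced Role and RoleBinding with a single validate rule. The Role, RoleBinding, and policy names are hypothetical, and the one restriction shown (an exception may reference only one policy) is just one possibility, not a reproduction of the rules in the example above.

```yaml
# Hypothetical RBAC: user "chip" may manage PolicyExceptions only in falcon-dev
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: polex-editor                  # hypothetical name
  namespace: falcon-dev
rules:
- apiGroups:
  - kyverno.io
  resources:
  - policyexceptions
  verbs:
  - create
  - get
  - list
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: polex-editor-chip             # hypothetical name
  namespace: falcon-dev
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: polex-editor
subjects:
- apiGroup: rbac.authorization.k8s.io
  kind: User
  name: chip
---
# Hypothetical validate rule: reject exceptions that span more than one policy
apiVersion: kyverno.io/v1
kind: ClusterPolicy
metadata:
  name: restrict-policy-exceptions    # hypothetical name
spec:
  validationFailureAction: Enforce
  background: false
  rules:
  - name: single-policy-only
    match:
      any:
      - resources:
          kinds:
          - PolicyException
    validate:
      message: "A PolicyException may reference only one policy."
      deny:
        conditions:
          any:
          # spec.exceptions is a list with one entry per exempted policy
          - key: "{{ length(request.object.spec.exceptions) }}"
            operator: GreaterThan
            value: 1
```

RBAC decides who may create an exception and where; the validate rule then constrains what an accepted exception may contain.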

Now that the procedural decisions are out of the way, we can create the components that give Policy Exceptions an expiration date. The workflow is as follows:

- A user creates a Policy Exception in the falcon-dev Namespace.

- Once it passes validation (if any), it is persisted in the cluster.

- Kyverno observes this creation and responds by generating a ClusterCleanupPolicy which is set to remove this Policy Exception four hours from now.

- Once the expiration date is reached, Kyverno removes the Policy Exception.

We first need to grant Kyverno some additional permissions so it can both create the cleanup policy and delete the Policy Exception. Because Kyverno uses ClusterRole aggregation, this is simple: the labels on the ClusterRoles below aggregate them into Kyverno's existing roles, so none of the stock ClusterRoles need to be modified.

```yaml
# allow a Kyverno generate rule to create ClusterCleanupPolicies
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    app.kubernetes.io/instance: kyverno
    app.kubernetes.io/name: kyverno
    app: kyverno
  name: kyverno:create-cleanups
rules:
- apiGroups:
  - kyverno.io
  resources:
  - clustercleanuppolicies
  verbs:
  - create
  - get
  - list
  - update
  - delete
---
# allow the Kyverno cleanup controller to remove PolicyExceptions
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    app.kubernetes.io/component: cleanup-controller
    app.kubernetes.io/instance: kyverno
    app.kubernetes.io/name: kyverno-cleanup-controller
  name: kyverno:cleanup-controller-polex
rules:
- apiGroups:
  - kyverno.io
  resources:
  - policyexceptions
  verbs:
  - list
  - delete
```

To do the actual work, we'll use a generate rule which creates the ClusterCleanupPolicy for us. The ClusterCleanupPolicy is cluster-scoped and allows Kyverno to remove any resource anywhere in the cluster. Let's walk through this policy below.

When any PolicyException is created, Kyverno will generate a ClusterCleanupPolicy whose name consists of the Namespace where the Policy Exception was created, the name of the Policy Exception itself, and a random eight-character string for uniqueness. Since cleanup policies require a schedule in cron format, Kyverno takes the current UTC time, adds four hours, and converts the result into a cron expression. The generated ClusterCleanupPolicy also receives a label so it can be identified easily, and it matches only the specific Policy Exception created at the beginning of this flow.

```yaml
apiVersion: kyverno.io/v2beta1
kind: ClusterPolicy
metadata:
  name: automate-cleanup
spec:
  background: false
  rules:
  - name: cleanup-polex
    match:
      any:
      - resources:
          kinds:
          - PolicyException
    generate:
      apiVersion: kyverno.io/v2alpha1
      kind: ClusterCleanupPolicy
      name: polex-{{ request.namespace }}-{{ request.object.metadata.name }}-{{ random('[0-9a-z]{8}') }}
      synchronize: false
      data:
        metadata:
          labels:
            kyverno.io/automated: "true"
        spec:
          schedule: "{{ time_add('{{ time_now_utc() }}','4h') | time_to_cron(@) }}"
          match:
            any:
            - resources:
                kinds:
                - PolicyException
                namespaces:
                - "{{ request.namespace }}"
                names:
                - "{{ request.object.metadata.name }}"
```

Now, with all this in place, let's test it by creating a Policy Exception. The one below exempts a specific Pod named emergency-busybox in the falcon-dev Namespace, when created by a user named chip, from the host-namespaces rule in the disallow-host-namespaces ClusterPolicy.

```yaml
apiVersion: kyverno.io/v2alpha1
kind: PolicyException
metadata:
  name: emergency-busybox
  namespace: falcon-dev
spec:
  exceptions:
  - policyName: disallow-host-namespaces
    ruleNames:
    - host-namespaces
  match:
    any:
    - resources:
        kinds:
        - Pod
        names:
        - emergency-busybox
        namespaces:
        - falcon-dev
      subjects:
      - kind: User
        name: chip
```

As that user, I can now create the Pod that would normally have been blocked.

```
$ kubectl -n falcon-dev run emergency-busybox --image busybox:1.28 --overrides '{"spec":{"hostPID": true}}' -- sleep 1d
pod/emergency-busybox created
```

Let's list the ClusterCleanupPolicies to see what happened.

```
$ kubectl get clustercleanuppolicies
NAME                                          SCHEDULE       AGE
polex-falcon-dev-emergency-busybox-y8mnuyyb   24 19 11 2 6   87s
```

Awesome! We can see it generated a proper ClusterCleanupPolicy for us. Let's inspect its contents.

```yaml
apiVersion: kyverno.io/v2alpha1
kind: ClusterCleanupPolicy
metadata:
  creationTimestamp: "2023-02-11T15:24:31Z"
  generation: 1
  labels:
    app.kubernetes.io/managed-by: kyverno
    kyverno.io/automated: "true"
    kyverno.io/generated-by-kind: PolicyException
    kyverno.io/generated-by-name: emergency-busybox
    kyverno.io/generated-by-namespace: falcon-dev
    policy.kyverno.io/gr-name: ur-dpfvn
    policy.kyverno.io/policy-name: automate-cleanup
    policy.kyverno.io/synchronize: disable
  name: polex-falcon-dev-emergency-busybox-y8mnuyyb
  resourceVersion: "1601278"
  uid: 8323279e-18a2-41f6-afc7-44a74db8f240
spec:
  match:
    any:
    - resources:
        kinds:
        - PolicyException
        names:
        - emergency-busybox
        namespaces:
        - falcon-dev
  schedule: 24 19 11 2 6
```

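As we can see, the contents of this cleanup policy were generated from the templated information in the rule. Notice that the schedule is four hours after the creation timestamp of 15:24 UTC: read in cron's minute, hour, day-of-month, month, day-of-week order, 24 19 11 2 6 means 19:24 on February 11, a Saturday (day 6).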

All that's left at this point is to let your user perform their troubleshooting while you go about your business. When the expiration date is reached, Kyverno deletes the Policy Exception, so no further Pods with that name can bypass the rule. Your policy is restored to its previous state without you having to lay a finger on it.

You can imagine at this point not only how useful Policy Exceptions can be, but also how multiple other Kyverno capabilities can be brought to bear to really improve your life as a platform engineer. With tools like these in your toolbox, there's very little you can't accomplish as long as you can dream it up.

If you liked this post, I'm always glad to hear feedback, so feel free to drop me a note on Twitter or come find me on Slack.